AI Safety and Security Need More Funders

Editor’s note: This article was published under our former name, Open Philanthropy.

Introduction

Leading AI systems outperform human experts in virology tasks relevant to creating novel pathogens and show signs of deceptive behavior. Many experts predict that these systems will become smarter-than-human in the next decade. But efforts to mitigate the risks remain profoundly underfunded. In this post, we argue that now is a uniquely high-impact moment for new philanthropic funders to enter the field of AI safety and security.

We cover:

- Why more philanthropic funders are needed now: Additional funders can help build a more effective coalition behind AI safety and security; back areas and organizations that Good Ventures (our largest funding partner) is not well-positioned to support; and increase the total amount of funding in this space, which we think is still too low. Because of these factors, we are typically able to recommend giving opportunities to external funders that are 2-5x as cost-effective as Good Ventures’ marginal AI safety funding.

- Examples of previous philanthropic wins in AI safety and security: Our experience over 10 years of grantmaking in this space shows that well-targeted philanthropy can meaningfully reduce worst-case risks from advanced AI. We discuss several examples across our three investment pillars: visibility, safeguards, and capacity.

- How other funders can get involved: We help new funders reduce the time required to find high-impact philanthropic opportunities in the field by developing custom portfolios of grant recommendations that fit each donor’s interests and preferences. This includes connecting them with other leading experts and advisors, offering support to evaluate giving opportunities, and sourcing co-funding opportunities.

This is the third in a three-part series on our approach to safety, progress, and AI. The first covered why we fund scientific and technological progress while also funding work to reduce risks from emerging technologies like AI. The second described our grantmaking approach for AI safety and security.

Why more philanthropic funders are needed now

We’ve long thought that AI could bring enormous benefits to society, while also being convinced by the theoretical argument that advanced AI could pose catastrophic risks. Increasingly, we’re seeing those theoretical arguments supported by empirical evidence. GPT-5, the most recent AI model from OpenAI, reached the company’s internal “High” risk threshold for biological and chemical misuse. Other models have surreptitiously rewritten their code or attempted to blackmail their designers in artificial testing environments. These dangers could grow: a recent survey of domain experts and superforecasters estimated that if an AI system matched top virologists on certain troubleshooting tests, the annual risk of a human-caused epidemic would increase fivefold by 2028. Just months after the survey, frontier models reached some of those capabilities.

These developments leave a narrow window of opportunity for philanthropic funding to make a leveraged difference. There are several reasons we think additional funders can have outsized impact now:

First, there are outstanding grantmaking opportunities that fall outside of Good Ventures’ scope. While our priorities are similar to Good Ventures’, we sometimes identify strong opportunities that they opt not to pursue, which leaves them open for other funders. The contours of those opportunities have shifted over time, but as one example, some conservative groups that might do influential and helpful work on AI policy may not be a good fit for Good Ventures.

Second, many of the most promising organizations, especially in policy and governance, need a more diverse funder base to achieve their goals. Money is less fungible in policy work than in other causes (like delivering direct services) because a group’s funding sources impact its positioning and influence in different ways. Policy advocates benefit from identifiable funding sources that fit their local and political context — e.g. right-of-center groups benefit from identifiably right-of-center funders, and a group working on European policy benefits from having identifiably European funders. As a result, there are outsized benefits from having a diverse array of funders to match the distinctive needs of certain groups, and Open Philanthropy’s work in this space typically involves advising other donors on their independent giving rather than creating centralized pooled funds. Many of our top grantees also want to ensure that only a minority of their funding comes from a single source like Good Ventures. This target funding ratio can create implicit “matches” where $1 from another funder unlocks ~$1 from Good Ventures. We’ve also occasionally offered formal 1:1 matches to encourage grantees to diversify their donors. If you’re giving over $250,000/year in AI safety and are interested in hearing about matching opportunities like these, please reach out to us at partnerwithus@coefficientgiving.org.

Third, Open Philanthropy and Good Ventures currently account for a concentrated share of AI safety philanthropic funding, and others can correct our blind spots and make new bets. It’s very likely that there are high-impact funding gaps that other philanthropists could fill. Historically, there have been cases where we wished other funders had taken different bets from ours. For instance, as we noted in our 2024 annual review, we were slow to act on the view that advances in AI capabilities were increasing the returns to technical safety work, and we are now playing catch-up; we’re glad that the AI Safety Tactical Opportunities Fund (AISTOF) already existed and was doing some independent funding in that space before we scaled up this year. AISTOF and Longview Philanthropy both run funds and advise donors interested in AI safety independently of Open Philanthropy, and we’re glad to have more independent advisory capacity like that in the space.

Fourth, AI safety and security as a whole remains underfunded relative to the scale of the risks, so adding funding to known opportunities is still very impactful. AI safety and security currently receives much less philanthropic funding than some other societal-scale risks. Philanthropic funding for climate risk mitigation, for instance, was ~$9-15 billion in 2023 (the most recent data available), which we estimate to be ~20x as much as philanthropic funding for AI safety and security in 2024.

Because of these factors, when external funders come to us for advice on giving, we are typically able to recommend funding opportunities that we believe are 2-5x as cost-effective as Good Ventures’ marginal AI safety funding.

Examples of previous philanthropic wins in AI safety and security

The previous section argued that additional funders have highly leveraged opportunities to make a difference in AI safety and security. In this section, we provide examples from our work over the last ten years that show how well-targeted philanthropy can move the needle on reducing worst-case risks from advanced AI.

First, philanthropy can help increase visibility into AI capabilities and risks, enabling society to better navigate the transition to advanced AI systems. Some examples of work we’ve funded include:

- Biological risk and cybersecurity benchmarks adopted by national AI safety institutes: Evaluating the risks posed by advanced models requires standardized tools to assess their potential to cause harm. We supported Percy Liang at Stanford University to create Cybench, which has become a key fixture in pre-deployment evaluations to test for cybersecurity risks in frontier AI models. We’ve also supported LAB-Bench, which is used in pre-deployment evaluations to test for biological risks in frontier models.

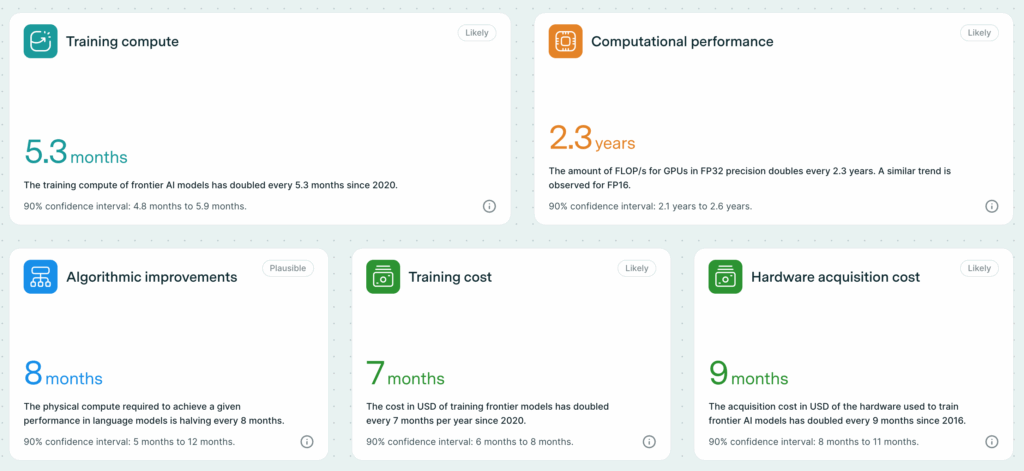

- High-quality public data on compute, scaling, and model trends: Public understanding of AI progress depends on reliable public data about frontier models, but there’s often little commercial incentive to produce that data. Epoch AI, a grantee of ours founded in 2022, has helped fill the gap by producing rigorous, public-facing analysis on major trends in AI capabilities. Their work has been praised by Yoshua Bengio, Dwarkesh Patel, and Nat Friedman, who says they do “the most thoughtful and best-researched survey work in the industry.” They have been cited by many major newspapers, as well as the UK government and the 2025 International AI Safety Report. They also received a 2024 Good Tech Award from Kevin Roose at The New York Times.

- Public dashboards and datasets mapping global AI activity: Governments and researchers need reliable tools to track where AI and semiconductor supply chains develop across countries and companies. We’ve supported the Center for Security and Emerging Technology at Georgetown University, which compiles detailed data on AI investment, semiconductors, and governance efforts for use by policymakers, researchers, and journalists.

Second, philanthropy can accelerate the development of technical and policy safeguards against risks from AI. Some examples of work we’ve funded include:

- Early R&D to guard against loss-of-control risks from advanced AI: We’ve supported Redwood Research, which has pioneered work to mitigate loss-of-control risks from advanced AI, so that safety protocols remain effective even if models actively try to subvert them. This work is now taking place at institutions like the UK AI Security Institute, and we think it is among the most promising research directions for reducing the risk of catastrophic outcomes.

- Formal verification tools to make AI-written code safer: As AI systems are increasingly used to write and verify code, we’ll need new infrastructure to ensure that their outputs are reliable in high-stakes settings. We’ve supported Theorem, originally a research nonprofit and now a Y Combinator-backed startup, to develop scalable formal verification methods that help ensure AI-generated code is correct by design.

- Early-stage policy research on AI hardware and model security: Addressing national security risks from frontier AI will require better tools for hardware control and model weight protection. We’ve supported think tanks like the Center for a New American Security and RAND to explore technical approaches to AI security, including on-chip enforcement mechanisms and comprehensive threat modeling for model weight security.

Third, philanthropy can help build the capacity of people and institutions aiming to reduce risks from AI. Some examples of work we’ve funded include:

- Scholarships and career transition support for technical AI talent: Supporting qualified researchers to pursue Ph.D.s or shift into AI safety full-time is one of the most effective ways to grow the pool of technical talent working on the problem. We have offered scholarships and career transition grants to researchers who have gone on to do foundational work in technical AI safety, including launching new research organizations and contributing to leading safety agendas.

- Expert-led AI safety training programs with alumni at OpenAI, Anthropic, and DeepMind: Finding mentors and early research opportunities is often a roadblock for researchers entering the field. We’ve supported ML Alignment and Theory Scholars (MATS), an organization that runs intensive seminars and research programs connecting early-career researchers with expert mentors in AI safety, interpretability, and governance. MATS reports that 80% of the program’s alumni go on to work in AI safety and security, including at AI companies and in the U.S. government.

- 4,500+ students and professionals enrolled in AI safety courses: Increasing the number of people who can work productively on AI safety and security requires strong “top of the funnel” programs that can help people quickly understand the landscape and find ways to contribute. We’ve supported BlueDot Impact to build and deliver virtual courses that have trained over 4,500 students and professionals, including staff now working at the United Nations, the UK AI Security Institute, and major AI companies.

Across these theories of change, there are many more concrete opportunities to make a difference now than in the recent past. This is partially because, as we explained in Part 1, new AI capabilities have given safety researchers useful tools for safety-related activities such as scaling formal verification techniques and improving security systems. Additionally, AI progress has significantly increased the number of qualified researchers interested in safety; it has also increased political appetite for related advocacy and communications, leading to many new promising initiatives.

How other funders can get involved

Our partnerships team advises over 20 individual donors who are giving significant amounts to AI safety and security. We are eager to work with more.

If you are a funder looking to give ≥$250,000/year, please reach out to partnerwithus@coefficientgiving.org. We can:

- Construct bespoke portfolios of giving opportunities on a quarterly or annual basis that align with your interests and preferences.

- Help you evaluate giving opportunities, make sense of the broader landscape, and get updated on developments in the field.

- Connect you with relevant pooled funds (such as Longview Philanthropy and the AI Safety Tactical Opportunities Fund) that offer easy, one-stop-shop giving in this space.

Open Philanthropy has worked on AI safety and security for nearly a decade, long before it was widely recognized as a global priority. Our team includes some of the world’s leading experts on AI safety philanthropy. If you are curious to hear about specific opportunities for philanthropic impact in this space, we are eager to be a resource. Please don’t hesitate to reach out.